Building a chatbot

AI now is almost everywhere generating text, pictures, presentations and even videos. Chatbots before LLMs where based on patterns and prerecorded responses. While cost effective and easy they are limited in the responses they provides. They can't respond to questions outside their training data. or holding a conversation.

Despite their higher cost and the less control of the output LLMs have are being used in a lot of chatbot.

One of the first things to think about is how to control the output of that system. But when dealing with these black boxes it might have a reverse effect. like an AI refusing to show "dangerous" C++ code to underage kids. Or a one that might give some really creepy answers given the right prompt.

While expressing you intent with code is straightforward. using text can get confusing with either the AI or the user finding ways around.

Data and AI

Fine-tuning an AI each time some data change is quite slow and expensive. also we lose the source of the data that can help the user access the accuracy of the response.

It's useful for changing the tone or behavior of the AI instead. Think of giving someone a way too long training instead of a few books he can reference. This last approach is the one we are going to use.

Retrieval-augmented generation

Retrieval-augmented generation or RAG is helping the AI with some external data.

Giving all your documents to the AI with each question is not going to works. that leave us with two important questions:

-

How can we find the most relevant data ?

-

How can we get this data into the AI ?

For the first question if forget about the AI side for a moment. the answer is already in front of our eyes, search engines.

The search engines we use every day already do the exact thing. they tries to find the most relevant results (documents) to our search query.

Some AIs integrate existing ones directly like Bing chat, but we still need to build to be able to use our own data.

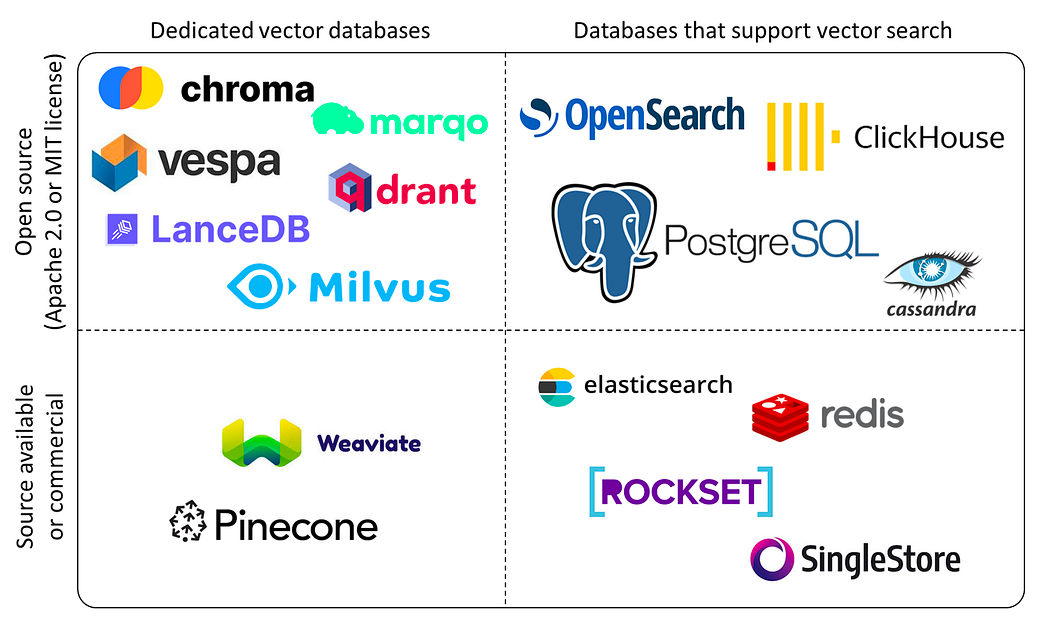

Enter vector databases which we can divide into two categories:

Dedicated, and ones that additionally support vector search

A general purpose database that support vector search makes sense if you already use it. While a Native one will be more tailored and more isolated than the rest of your data.

For this demo we will use Chroma DB an open-source AI-native embedding database.

Now that we settled on how to store and retrieve the data, how can we extract it in the first place.

A knowledge base for a company or similar will most likely contain PDFs. which are not trivial to parse.

AI and PDFs

PDFs while being great for preserving for a file look across many devices. It can be hard to parse in an automated fashion.

A few problems that you might encounter are:

-

PDFs with image only pages.

-

PDFs with one text block for each character or word

-

PDFs with weird structures

Besides extracting only the text form PDFs will result in loss of the structure of the text (Heading, Paragraphs, Tables, etc...)

No PDF parsing library is perfect, the best you can do is try multiple ones and compare the results.

One library built to address this issue is LLM Sherpa. It provides a way to convert the PDF into chunks while keeping the structure data.

Now that we established all the different technologies to use. The next post will be about how we can chain all of that together.